Reservoir sampling: who discovered Algorithm R?

Reservoir sampling is a class of algorithms for sampling from streaming data, i.e., a sequence of data that we can access only once. Presented below is Algorithm R, the first well-known reservoir sampling algorithm.

Reservoir sampling – Algorithm R. We wish to sample $m$ elements of $L$ with equal probability. To this end, we define a size-$m$ set $R$ (“reservoir”) and initialize it by setting $R[i] = x_i$ for $0 \leq i \leq m-1$. For each index $i \geq m$, we perform a simple random sampling on the set of integers between $0$ and $i$ to choose $k$. If $0 \leq k \leq m-1$, then we set $R[k] = x_i$; otherwise, we leave $R$ unchanged. $R$ is the desired sample, once we go through all elements in $L$. $\square$

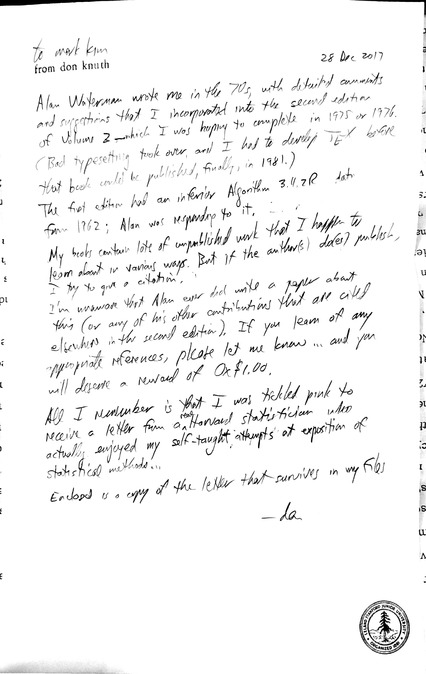

In Volume 2, Section 3.4.2 of The Art of Computer Programming, Knuth attributes Algorithm R to Alan G. Waterman. He, however, does not provide a reference, and there appears to be little information available on the matter. I sent a letter of inquiry to Knuth and received the following reply:

Alan Waterman wrote me in the 70s, with detailed comments and suggestions that I incorporated into the second edition of Volume 2—which I was happy to complete in 1975 or 1976. (Bad typesetting took over, and I had to develop $\TeX$ before that book could be published, finally, in 1981.)

The first edition had an inferior Algorithm 3.4.2R dating from 1962; Alan was responding to it.

My books contain lots of unpublished work that I happen to learn about in various ways. But if the author(s) do(es) publish, I try to give a citation.

I’m unaware that Alan ever did write a paper about this (or any of his other contributions that are cited elsewhere in the second edition). If you learn of any appropriate references, please let me know … and you will deserve a reward of 0x$1.00.

All I remember is that I was tickled pink to receive a letter from a real Harvard statistician who actually enjoyed my self-taught attempts at exposition of statistical methods…

Enclosed is a copy of the letter that survives in my files.

-don

(high-def)

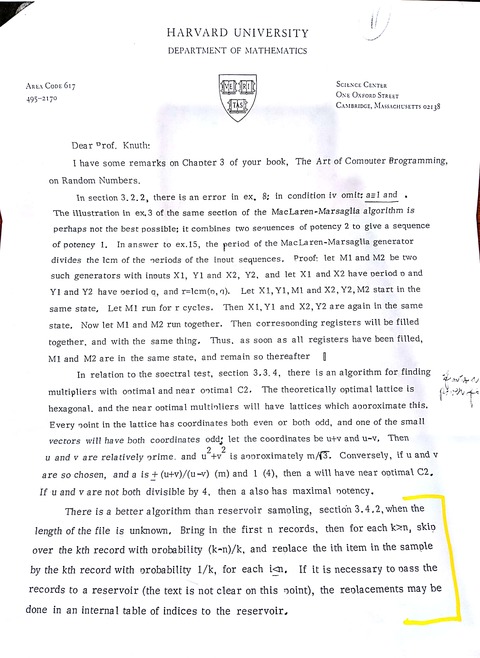

Here are the relevant sections of Waterman’s 1975 letter to Knuth:

There is a better algorithm than reservoir sampling, section 3.4.2, when the length of the file is unknown. Bring in the first $n$ records, then for each $k > n$, skip over the $k$th record with probability $(k-n)/k$, and replace the $i$th item in the sample by the $k$th record with probability $1/k$, for each $i \leq n$. If it is necessary to pass the records to a reservoir (the text is not clear on this point), the replacements may be done in an internal table of indices to the reservoir.

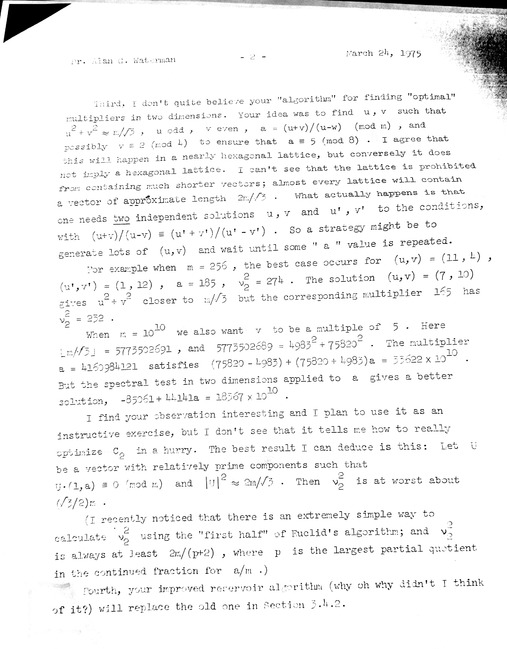

Waterman’s algorithm replaces Knuth’s own algorithm from the first edition of The Art of Computer Programming. Below, a reply from Knuth to Waterman:

Fourth, your improved reservoir algorithm (why oh why didn’t I think of it?) will replace the old one in Section 3.4.2.

(high-def)

(high-def)

All in all, Algorithm R was known to Knuth and Waterman by 1975, and to a wider audience by 1981, when the second edition of The Art of Computer Programming volume 2 was published.

Meanwhile, A. I. McLeod and D. R. Bellhouse appear to have discovered Algorithm R independently, as [McLeod–Bellhouse 1983] presents Algorithm R without citing Knuth or Waterman.

Before the publication of Waterman’s algorithm, [Fan–Muller–Rezucha 1962] described a similar, but not quite the same, algorithm:

Procedure of Method 4:

For each $t$, $t = 1, 2, \cdots, n$, obtain $r_t$, associate it with the item and its label $I_t$, and place these pieces of information in the reservoir. For each $t$, $t > n$, place $r_t$, the item, and its label $I_t$ in the reservoir if $r_t$ is equal to or less than the maximum value of $r$ of a specified subset of values of $r$ in the reservoir. The subset of values of $r$ used in the comparison consists of the $D$ smallest distinct values of $r$ in the reservoir at the time of the comparison, where $D = n$ if all the $r$’s in this comparison subset are distinct; otherwise $D < n$.

When $t = N$, i.e., all the items have been inspected, stage one is completed.

Stage 2. Search the reservoir and select the $I_t$’s associated with the subset of the $n$ smallest values of $r$ in the reservoir Difficulty can occur only when the values of the $r$’s are not distinct. In this case it is possible that there will be one or more items in the reservoir with a value of $r$ equal to the largest value, say $r_u$, of the comparison subset upon completion of stage 1. Let $L$ denote the number of items in this comparison subset upon completion of stage 1 which have values of $r$ less than $r_u$ and let $M$ be the number of items in the reservoir which have a value of $r$ equal to $r_u$. To satisfy the original requirement of obtaining a random sample of exactly $n$ distinct items it will be necessary to utilize an additional selection procedure to select $M’$ distinct items out of $M$ such that $L+M’ = n$. This selection can be accomplished by using, for example, Method 1.

While it might be reasonable to say that the reservoir algorithm paradigm was discovered by Fan, Muller, and Rezucha, it seems unlikely that they were aware of Algorithm R before Knuth published Waterman’s algorithm.

As far as I can tell, most citations of Algorithm R credit Waterman. Curiously, however, the Wikipedia article on reservoir sampling makes no mention of Waterman, crediting instead J. S. Vitter via [Vitter 1985]. But then, the Vitter paper cites Waterman:

Algorithm R (which is is [sic] a reservoir algorithm due to Alan Waterman) works as follows: When the $(t+1)$st record in the file is being processed, for $t \geq n$, the $n$ candidates form a random sample of the first $t$ records. The $(t+1)$st record has a $n/(t+1)$ chance of being in a random sample of size $n$ of the first $t+1$ records, and so it is made a candidate with probability $n/(t+1)$. The candidate it replaces is chosen randomly from the $n$ candidates. It is easy to see that the resulting set of $n$ candidates forms a random sample of the first $t+1$ records.

This is probably why high school history teachers tell their students not to use Wikipedia for their essay homework.